Understanding Above-Ground Biomass: Its Importance and the Role of Satellite Data

What is Above-Ground Biomass?

Above-ground biomass (AGB) refers to all the living plant material above the soil surface, including trees, shrubs, and grasses. This biomass represents a significant component of forest ecosystems and is crucial in understanding the overall health, productivity, and carbon storage potential of these environments.

Why is Measuring the Above-Ground Biomass Important for Tree.ly?

Forests play a vital role in the global carbon cycle by absorbing carbon dioxide (CO₂) from the atmosphere and storing it in their biomass. Globally, forest biomass is the second-largest carbon sink after the oceans and therefore contributes significantly to mitigating climate change. At Tree.ly, AGB is used to calculate the carbon storage potential of a forest and to monitor annual changes in biomass.
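As a rough illustration of how an AGB value translates into carbon storage, the sketch below converts dry biomass into tonnes of CO₂ equivalent and computes the change between two years. It assumes a carbon fraction of 0.5 (a common rule of thumb, since roughly half of dry biomass is carbon) and uses the molar mass ratio 44/12 of CO₂ to carbon. The function names are hypothetical, and this is not necessarily the exact calculation Tree.ly uses.

```python
# Molar mass ratio of CO2 (44 g/mol) to carbon (12 g/mol)
CO2_PER_C = 44.0 / 12.0


def agb_to_co2e(agb_t: float, carbon_fraction: float = 0.5) -> float:
    """Convert dry above-ground biomass (tonnes) into tonnes of CO2 equivalent.

    carbon_fraction is an assumption: 0.5 is used as a round illustrative default.
    """
    if agb_t < 0 or not 0 < carbon_fraction <= 1:
        raise ValueError("agb_t must be >= 0 and carbon_fraction in (0, 1]")
    carbon_t = agb_t * carbon_fraction
    return carbon_t * CO2_PER_C


def annual_change_co2e(agb_prev_t: float, agb_curr_t: float, carbon_fraction: float = 0.5) -> float:
    """CO2e difference between two years; positive means additional CO2 was stored."""
    return agb_to_co2e(agb_curr_t, carbon_fraction) - agb_to_co2e(agb_prev_t, carbon_fraction)


print(round(agb_to_co2e(100.0), 2))  # prints 183.33
```

The carbon fraction is exposed as a parameter so that species- or region-specific values can replace the default.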

How is Above-Ground Biomass Calculated?

Traditionally, AGB is estimated with ground-based methods such as forest inventory plots, in which tree diameter, height, and species are measured. These measurements are then fed into allometric equations to estimate biomass. However, these methods are labor-intensive, time-consuming, and limited in spatial coverage, so inventory data is typically only updated every 5 to 10 years.
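To make the allometric step concrete, here is a minimal sketch using the simple power-law form AGB = a · DBH^b per tree, summed over an inventory plot and scaled to tonnes per hectare. Many published equations also include tree height and wood density; the coefficients `a` and `b` below are placeholders, not real species coefficients, and the function names are hypothetical.

```python
def tree_agb_kg(dbh_cm: float, a: float, b: float) -> float:
    """Power-law allometric equation AGB = a * DBH^b, in kg per tree.

    a and b are species- and region-specific coefficients from the literature;
    the values used below are placeholders only.
    """
    return a * dbh_cm ** b


def plot_agb_t_per_ha(dbhs_cm: list[float], plot_area_m2: float, a: float, b: float) -> float:
    """Sum tree-level AGB of one inventory plot and scale it to tonnes per hectare."""
    if plot_area_m2 <= 0:
        raise ValueError("plot area must be positive")
    total_kg = sum(tree_agb_kg(d, a, b) for d in dbhs_cm)
    plot_area_ha = plot_area_m2 / 10_000  # 1 ha = 10,000 m^2
    return total_kg / 1_000 / plot_area_ha  # kg -> t, then per hectare


# Hypothetical 500 m^2 plot with three measured trees (placeholder coefficients)
dbhs = [10.0, 20.0, 35.0]
print(plot_agb_t_per_ha(dbhs, plot_area_m2=500.0, a=0.1, b=2.4))
```

Scaling from plot to hectare is what makes plots of different sizes comparable, and it is also why sparse plot networks translate into coarse, infrequently updated estimates.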

Airborne laser scanning (ALS)-based models are another widely used method for estimating AGB and offer higher accuracy than traditional ground-based methods. ALS data is often publicly available because nationwide datasets are normally collected by state institutions. However, these datasets are updated infrequently (every few years at best) and are often not harmonized across states, or even within a single state. Data collection relies on aircraft or drone flights and is expensive, especially for large areas. Despite these challenges, ALS-based methods are well established both in industry and in scientific projects.
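ALS-based models typically follow an area-based approach: height metrics are computed from the laser returns within each grid cell and related to AGB measured on field plots. The sketch below illustrates the idea with two simple metrics, mean canopy height and canopy cover, and a power-law model. The 2 m canopy threshold, the combined metric, the model form, and the coefficients are illustrative assumptions, not a calibrated model.

```python
def canopy_metrics(return_heights_m: list[float], min_height_m: float = 2.0) -> tuple[float, float]:
    """Summarise the height-normalised ALS returns of one grid cell.

    Returns (mean height of canopy returns, canopy cover fraction). Returns
    below min_height_m are treated as ground or low vegetation (an assumption).
    """
    if not return_heights_m:
        raise ValueError("no returns in this cell")
    canopy = [h for h in return_heights_m if h >= min_height_m]
    cover = len(canopy) / len(return_heights_m)
    mean_height = sum(canopy) / len(canopy) if canopy else 0.0
    return mean_height, cover


def cell_agb_t_per_ha(mean_height_m: float, cover: float, a: float, b: float) -> float:
    """Hypothetical area-based model AGB = a * (mean height * cover)^b.

    In practice, a and b would be fitted by regressing field-plot AGB
    against the ALS metrics of the same plots.
    """
    return a * (mean_height_m * cover) ** b


# Made-up normalised return heights (m) for one cell
heights = [0.1, 0.3, 15.0, 20.0, 25.0]
mean_h, cover = canopy_metrics(heights)
print(mean_h, cover, cell_agb_t_per_ha(mean_h, cover, a=2.0, b=1.1))
```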

While satellites like Sentinel-1 and Sentinel-2 offer valuable data on vegetation structure, canopy density, and spectral properties for large-scale AGB estimation, current methods still lack the accuracy required for reliable use. With continued research and refinement, we are confident that these satellite-based approaches will eventually achieve the precision needed for practical application in forestry and carbon monitoring.
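Before Sentinel data can enter an AGB model, it is usually turned into per-pixel features. The sketch below shows two common examples: the NDVI computed from Sentinel-2 bands B08 (near-infrared) and B04 (red), and the conversion of Sentinel-1 backscatter (sigma0) from linear scale to decibels for the VV and VH polarisations. The pixel values are made up, and which features an AGB model should use is an open design choice; this is not Tree.ly's model.

```python
import math


def ndvi(b08_nir: float, b04_red: float) -> float:
    """Normalised difference vegetation index from Sentinel-2 B08 (NIR) and B04 (red)."""
    denominator = b08_nir + b04_red
    if denominator == 0:
        raise ValueError("NIR + red reflectance must not be zero")
    return (b08_nir - b04_red) / denominator


def backscatter_to_db(sigma0_linear: float) -> float:
    """Convert Sentinel-1 backscatter (sigma0) from linear scale to decibels."""
    if sigma0_linear <= 0:
        raise ValueError("linear backscatter must be positive")
    return 10.0 * math.log10(sigma0_linear)


# Hypothetical reflectance and backscatter values for a single forest pixel
features = {
    "ndvi": ndvi(b08_nir=0.35, b04_red=0.05),
    "vv_db": backscatter_to_db(0.1),
    "vh_db": backscatter_to_db(0.02),
}
print(features)
```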

The Advantages of Automating AGB Calculation with Satellite Data

The ability to estimate AGB automatically using satellite data offers several advantages:

- Cost-Effectiveness: Automated AGB estimation using satellite data reduces the need for extensive fieldwork, making it a cost-effective solution for large-scale biomass monitoring.
- Large-Scale Monitoring: Satellite data allows vast forested areas to be monitored, including remote and inaccessible regions that would be impractical or impossible to survey with traditional ground-based methods.
- Frequent Updates: Satellites revisit the same area at short intervals. Sentinel-1, for example, has a repeat cycle of 12 days per satellite, or 6 days when two satellites are in operation. This enables regular monitoring of forests and provides up-to-date information on changes in biomass, which is particularly useful for tracking deforestation and forest degradation in near real time.
- Consistency, Comparability, and Reduced Errors: Satellite-based methods provide standardized data that can be applied consistently across different regions and time periods, facilitating comparisons and trend analysis. Additionally, automated data collection eliminates manual measurement errors, which makes the results more reproducible.
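To illustrate how frequent and consistent AGB maps support monitoring, the sketch below compares two annual AGB rasters (given as nested lists of t/ha values on the same grid) and flags pixels whose biomass dropped by more than a threshold. The function name and the 50 t/ha default threshold are hypothetical; a production pipeline would more likely use array libraries and account for the uncertainty of each estimate.

```python
def flag_biomass_loss(
    agb_prev: list[list[float]],
    agb_curr: list[list[float]],
    loss_threshold_t_per_ha: float = 50.0,
) -> list[list[bool]]:
    """Flag pixels whose AGB decreased by more than the threshold between two years.

    The default threshold is an arbitrary placeholder, not a recommended value.
    """
    if len(agb_prev) != len(agb_curr) or any(
        len(row_p) != len(row_c) for row_p, row_c in zip(agb_prev, agb_curr)
    ):
        raise ValueError("both AGB maps must have the same shape")
    return [
        [(prev - curr) > loss_threshold_t_per_ha for prev, curr in zip(row_p, row_c)]
        for row_p, row_c in zip(agb_prev, agb_curr)
    ]


# Made-up 2 x 2 AGB maps (t/ha) for two consecutive years
year_1 = [[200.0, 180.0], [150.0, 90.0]]
year_2 = [[195.0, 100.0], [150.0, 20.0]]
print(flag_biomass_loss(year_1, year_2))
```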

Sentinel Satellites for Earth Observation and Environmental Monitoring

The Sentinel satellites, developed and operated by the European Space Agency (ESA) for the Copernicus program, are key assets in Earth observation and provide free and publicly accessible data. Copernicus is the European Union's Earth observation program, implemented by the European Commission in partnership with ESA.

Sentinel-1:

- Launch Date: April 2014
- Imaging Technology: C-band synthetic aperture radar (SAR)
- Mission Composition: Originally two satellites, Sentinel-1A and Sentinel-1B. The Sentinel-1B mission ended in 2022 due to a technical failure.
- Orbit Altitude: 693 km
- Capabilities: Sentinel-1's radar instrument can penetrate clouds and rain and operates both day and night. It supports four imaging modes, with resolutions down to 5 m and swath widths of up to 400 km.
- Applications:
  - Land Monitoring: Agriculture, forestry, and land subsidence
  - Marine Monitoring: Sea-ice levels and conditions, oil spills, and ship activity
  - Emergency Response: Flooding, landslides, and earthquakes

Sentinel-2:

- Launch Date: June 2015
- Imaging Technology: Multispectral optical imaging satellite
- Mission Composition: Two identical satellites (Sentinel-2A and Sentinel-2B) in the same orbit, phased 180° apart
- Orbit Altitude: 786 km
- Capabilities: Sentinel-2 captures image data in 13 spectral bands at spatial resolutions of 10 m, 20 m, and 60 m, with a swath width of 290 km. With both satellites, it offers a revisit frequency of 5 days at the Equator.
- Applications:
  - Agricultural Monitoring
  - Land Cover Classification
  - Water Quality Assessment
  - Risk Mapping: Maritime and border surveillance, humanitarian relief operations
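The mission figures above can be captured as simple lookup tables, which is handy when a processing pipeline has to pick bands or plan acquisition dates. The sketch below lists the native resolution of the 13 Sentinel-2 bands and derives the revisit time at the Equator from the single-satellite repeat cycle (12 days for Sentinel-1, 10 days for Sentinel-2), assuming the satellites in orbit are evenly phased. The names of the tables and helpers are hypothetical.

```python
# Native spatial resolution (m) of the 13 Sentinel-2 MSI bands
S2_BAND_RESOLUTION_M = {
    "B01": 60, "B02": 10, "B03": 10, "B04": 10, "B05": 20, "B06": 20, "B07": 20,
    "B08": 10, "B8A": 20, "B09": 60, "B10": 60, "B11": 20, "B12": 20,
}

# Repeat cycle of a single satellite, in days
REPEAT_CYCLE_DAYS = {"Sentinel-1": 12, "Sentinel-2": 10}


def bands_at(resolution_m: int) -> list[str]:
    """Return the Sentinel-2 bands with the given native resolution."""
    return sorted(band for band, res in S2_BAND_RESOLUTION_M.items() if res == resolution_m)


def revisit_days(mission: str, satellites_in_orbit: int) -> float:
    """Revisit time at the Equator, assuming evenly phased satellites."""
    if satellites_in_orbit < 1:
        raise ValueError("at least one satellite is required")
    return REPEAT_CYCLE_DAYS[mission] / satellites_in_orbit


print(bands_at(10))
print(revisit_days("Sentinel-1", 1), revisit_days("Sentinel-2", 2))
```

With one Sentinel-1 satellite the revisit doubles to 12 days, which is why the loss of Sentinel-1B matters for monitoring frequency.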

How to Acquire Sentinel Data

In principle, Sentinel raw data is freely and publicly available through the Copernicus Data Space Ecosystem catalogue. Through the official Copernicus channels, it can be accessed in two main ways:

-

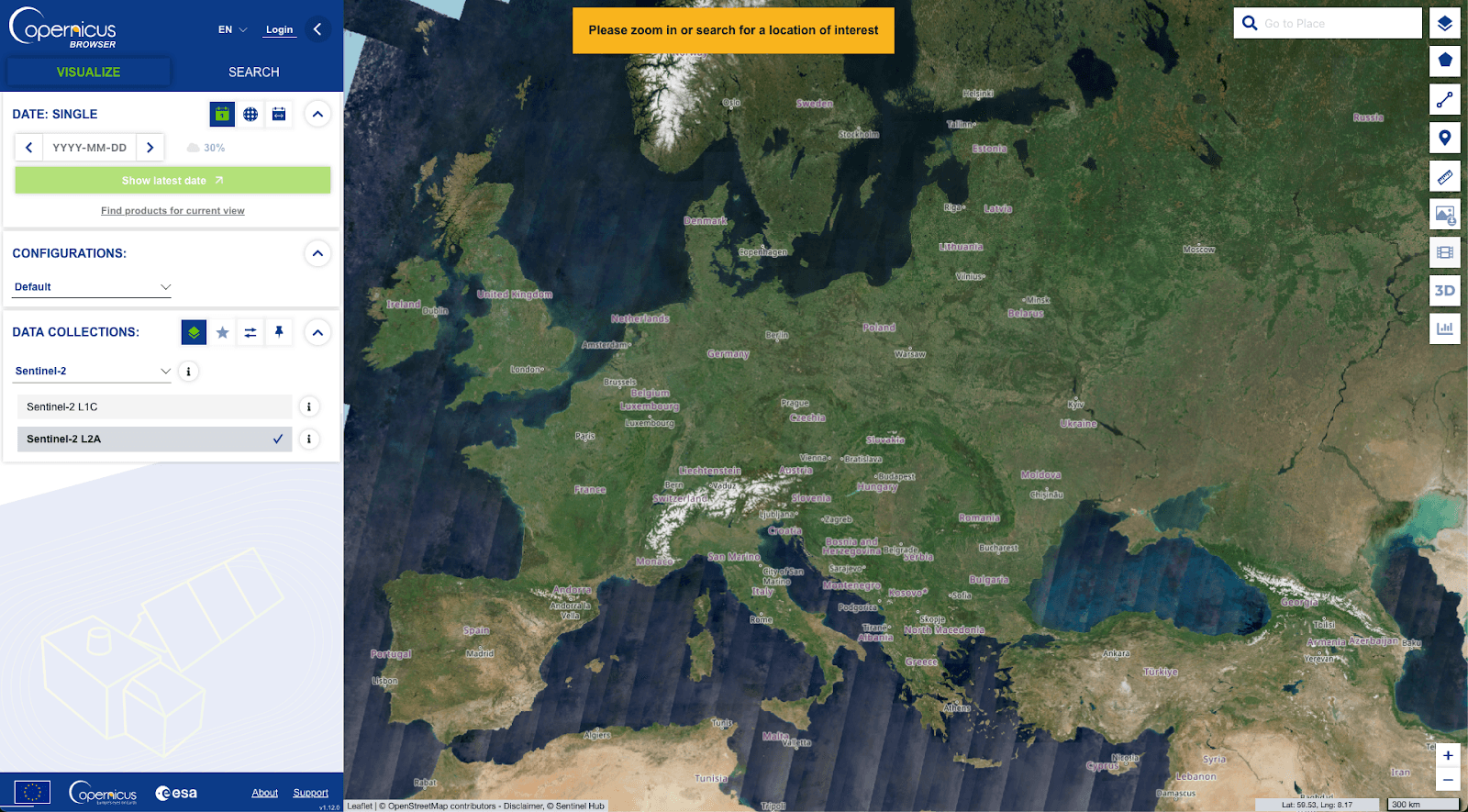

Copernicus Browser for manual search, visualization, and acquisition of small amounts of data

-

APIs for programmatic search, data acquisition, and data processing

Copernicus Browser

An easy way to manually browse, visualize, and access the data is the official Copernicus Browser. The Browser provides filters for different themes and data collections, and it lets users search for data within a given time frame and area of interest. This makes it a good entry point for quickly assessing whether the available data meets a given set of requirements.

The Browser is documented in detail in the Copernicus Data Space Ecosystem documentation.

APIs

The Copernicus Data Space Ecosystem offers several APIs that serve different purposes, each of which is described in detail in the official documentation.

Catalogue APIs

The following four REST APIs, each implementing a different specification, can be used to query the Copernicus Data Space Ecosystem catalogue and retrieve data:

OData

OData (Open Data Protocol) is an ISO/IEC-approved OASIS standard for RESTful APIs. It allows resources, identified by URLs and defined in a data model, to be created, queried, and edited using simple HTTPS messages.

STAC API

The Copernicus Data Space Ecosystem STAC API is a web service interface for querying a group of STAC collections held in a database.

OpenSearch

The OpenSearch catalogue lets you search Copernicus data via a standardized web service and returns the results as GeoJSON feature collections. Each feature represents an Earth observation product and points to the location of its data.

SH Catalog API

The Sentinel Hub Catalog API ("Catalog" for short) implements the STAC specification and describes geospatial information about the different data used with Sentinel Hub.
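Both STAC-based APIs follow the STAC API item search, which accepts `collections`, `bbox`, `datetime` (an RFC 3339 interval) and `limit` as search parameters, for example in the JSON body of a `POST /search` request. The sketch below only builds such a body and does not send it. The collection ID is a placeholder that should be checked against the collections exposed by the endpoint you query, the bounding box is the one around Vorarlberg used in the OData example, and the helper name is hypothetical; boxes crossing the antimeridian are not handled.

```python
import json


def stac_item_search_body(collection: str, bbox: tuple[float, float, float, float],
                          start: str, end: str, limit: int = 10) -> dict:
    """Build the JSON body of a STAC API item search (POST /search)."""
    west, south, east, north = bbox
    if west >= east or south >= north:
        raise ValueError("bbox must be (west, south, east, north)")
    return {
        "collections": [collection],
        "bbox": [west, south, east, north],
        "datetime": f"{start}/{end}",  # closed RFC 3339 interval
        "limit": limit,
    }


body = stac_item_search_body(
    "SENTINEL-1",  # placeholder collection ID
    (9.327124566949436, 46.72797959154448, 10.492558252813893, 47.63857765066646),
    "2015-06-01T00:00:00Z",
    "2015-06-07T00:00:00Z",
)
print(json.dumps(body, indent=2))
```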

Most API calls, in particular downloads, require an access token, which can be obtained after registering an account on the Copernicus Data Space Ecosystem:

export ACCESS_TOKEN=$(curl -d 'client_id=cdse-public' \
    -d 'username=<username>' \
    -d 'password=<password>' \
    -d 'grant_type=password' \
    'https://identity.dataspace.copernicus.eu/auth/realms/CDSE/protocol/openid-connect/token' | \
    python3 -m json.tool | grep "access_token" | awk -F\" '{print $4}')
printenv ACCESS_TOKEN
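If you prefer to stay in Python, the same form-encoded POST request can be sent with the standard library alone. The sketch below mirrors the curl call (same endpoint and form fields); the function names are hypothetical. Access tokens expire after a short time, so long download loops may need to request a new one, and credentials are best read from the environment rather than hard-coded.

```python
import json
import urllib.parse
import urllib.request

TOKEN_URL = "https://identity.dataspace.copernicus.eu/auth/realms/CDSE/protocol/openid-connect/token"


def build_token_request(username: str, password: str) -> urllib.request.Request:
    """Build the same form-encoded POST request that the curl command sends."""
    form = urllib.parse.urlencode({
        "client_id": "cdse-public",
        "username": username,
        "password": password,
        "grant_type": "password",
    }).encode()
    return urllib.request.Request(TOKEN_URL, data=form, method="POST")


def fetch_access_token(username: str, password: str) -> str:
    """Send the request and return the access_token field of the JSON response."""
    with urllib.request.urlopen(build_token_request(username, password), timeout=30) as response:
        return json.load(response)["access_token"]
```

Usage: `token = fetch_access_token(os.environ["CDSE_USER"], os.environ["CDSE_PASSWORD"])`, where the two environment variable names are our own choice.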

The example below queries the OData API for SENTINEL-1 products acquired between 1 and 6 June 2015 within a bounding box around Vorarlberg and downloads each product as a compressed ZIP archive.

import os

import pandas as pd
import requests

# Query the OData catalogue for Sentinel-1 products intersecting a bounding box
# around Vorarlberg, acquired between 1 and 6 June 2015
json_response = requests.get(
    "https://catalogue.dataspace.copernicus.eu/odata/v1/Products?$filter=Collection/Name eq 'SENTINEL-1' "
    "and OData.CSC.Intersects(area=geography'SRID=4326;POLYGON((9.327124566949436 47.63857765066646, "
    "9.327124566949436 46.72797959154448, 10.492558252813893 46.72797959154448, "
    "10.492558252813893 47.63857765066646, 9.327124566949436 47.63857765066646))') "
    "and ContentDate/Start gt 2015-06-01T00:00:00.000Z and ContentDate/Start lt 2015-06-07T00:00:00.000Z",
    timeout=60,
).json()
products = json_response.get("value", [])
df = pd.DataFrame.from_dict(products)  # one row per product, handy for inspection
product_ids = [product["Id"] for product in products]

# Read the access token exported in the shell step above
access_token = os.getenv("ACCESS_TOKEN")
if not access_token:
    raise RuntimeError("ACCESS_TOKEN is not set in the environment")

# Reuse one HTTP session that sends the bearer token with every request
session = requests.Session()
session.headers.update({"Authorization": f"Bearer {access_token}"})


def download_product(product_id):
    """Download one product as a ZIP archive named <product_id>.zip."""
    url = f"https://download.dataspace.copernicus.eu/odata/v1/Products({product_id})/$zip"
    response = session.get(url, stream=True, timeout=60)
    if response.status_code == 200:
        with open(f"{product_id}.zip", "wb") as file:
            # Write in chunks so large products are never held fully in memory
            for chunk in response.iter_content(chunk_size=8192):
                if chunk:
                    file.write(chunk)
    else:
        print(f"Failed to download file with ID {product_id}. Status code: {response.status_code}")
        print(response.text)


# Download every product returned by the query
for product_id in product_ids:
    download_product(product_id)
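The long filter expression in the example above can be assembled from a bounding box and a date range, which makes it easier to reuse for other areas and periods. The sketch below builds the closed WKT polygon in the same corner order as the example (longitude before latitude) and percent-encodes the `$filter` value. The helper names are hypothetical and only the filter operators already used in the example appear.

```python
import urllib.parse

ODATA_PRODUCTS_URL = "https://catalogue.dataspace.copernicus.eu/odata/v1/Products"


def bbox_to_wkt(west: float, south: float, east: float, north: float) -> str:
    """Closed WKT polygon ("lon lat" pairs) for a bounding box."""
    corners = [(west, north), (west, south), (east, south), (east, north), (west, north)]
    return "POLYGON((" + ", ".join(f"{lon} {lat}" for lon, lat in corners) + "))"


def odata_products_url(collection: str, bbox: tuple[float, float, float, float],
                       start: str, end: str) -> str:
    """Build an OData product query filtered by collection, area and sensing start time."""
    wkt = bbox_to_wkt(*bbox)
    flt = (
        f"Collection/Name eq '{collection}' "
        f"and OData.CSC.Intersects(area=geography'SRID=4326;{wkt}') "
        f"and ContentDate/Start gt {start} and ContentDate/Start lt {end}"
    )
    # Percent-encode the whole filter value (spaces become %20)
    return f"{ODATA_PRODUCTS_URL}?$filter={urllib.parse.quote(flt, safe='')}"


print(odata_products_url(
    "SENTINEL-1",
    (9.327124566949436, 46.72797959154448, 10.492558252813893, 47.63857765066646),
    "2015-06-01T00:00:00.000Z",
    "2015-06-07T00:00:00.000Z",
))
```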

Streamlined Data Access

The Streamlined Data Access APIs (SDA) enable users to access and retrieve data from the Copernicus Data Space Ecosystem catalogue. These APIs also provide a set of tools and services to support data processing and analysis.

Sentinel Hub

Analysis-ready data, Processing API, Statistical analysis API, COG hosting as API, Support for JS, Python

Pricing

30 days free trial

OpenEO

Analysis-ready data, Out of the box functions to preprocess data, Processing cloud environment, Hosted JupyterLab instance, Support for JS, Python, R

Pricing

Free tier that allows usage of openEO with a fixed amount of resources that can be consumed on a monthly basis

Access Data via S3

Data in the Copernicus Data Space Ecosystem collection can also be accessed directly via S3. To do so, users need to generate S3 access credentials (an access key and a secret key) in their account settings.

The following example shows how to download a product from S3 with Python:

import os

import boto3

# S3 credentials generated in the account settings, read from environment
# variables (the variable names are our own choice)
access_key = os.environ["CDSE_S3_ACCESS_KEY"]
secret_key = os.environ["CDSE_S3_SECRET_KEY"]

session = boto3.session.Session()
s3 = session.resource(
    "s3",
    endpoint_url="https://eodata.dataspace.copernicus.eu",
    aws_access_key_id=access_key,
    aws_secret_access_key=secret_key,
    region_name="default",
)


def download(bucket, product: str, target: str = "") -> None:
    """
    Downloads every file in bucket with provided product as prefix

    Raises FileNotFoundError if the product was not found

    Args:
        bucket: boto3 Resource bucket object
        product: Path to product
        target: Local directory for downloaded files. Should end with a `/`. Default current directory.
    """
    files = list(bucket.objects.filter(Prefix=product))
    if not files:
        raise FileNotFoundError(f"Could not find any files for {product}")
    for file in files:
        # Skip "directory" placeholder objects, whose keys end with a slash
        if file.key.endswith("/"):
            continue
        local_path = f"{target}{file.key}"
        # Recreate the product's folder structure below the target directory
        os.makedirs(os.path.dirname(local_path), exist_ok=True)
        bucket.download_file(file.key, local_path)


# Path to the product to download
download(s3.Bucket("eodata"), "Sentinel-1/SAR/SLC/2019/10/13/S1B_IW_SLC__1SDV_20191013T155948_20191013T160015_018459_022C6B_13A2.SAFE/")
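The product path in the S3 example encodes metadata in the Sentinel-1 product name: mission (S1A or S1B), beam mode (e.g. IW), product type and resolution class, processing level, product class and polarisation (DV stands for dual VV+VH), start and stop time, absolute orbit, mission datatake ID, and a product unique identifier. The sketch below parses such names. The `eodata_prefix` helper is hypothetical: it assumes that the folder layout `Sentinel-1/SAR/<product type>/<year>/<month>/<day>/` visible in the example applies to other products too, which may not hold for every product type.

```python
import re
from datetime import datetime

# Fields of a Sentinel-1 product name such as
# S1B_IW_SLC__1SDV_20191013T155948_20191013T160015_018459_022C6B_13A2.SAFE
S1_NAME = re.compile(
    r"^(?P<mission>S1[A-Z])_(?P<mode>[A-Z0-9]{2})_"
    r"(?P<product_type>[A-Z]{3})(?P<resolution>[FHM_])_"
    r"(?P<level>[012])(?P<product_class>[SA])(?P<polarisation>[A-Z]{2})_"
    r"(?P<start>\d{8}T\d{6})_(?P<stop>\d{8}T\d{6})_"
    r"(?P<orbit>\d{6})_(?P<datatake>[0-9A-F]{6})_(?P<unique_id>[0-9A-F]{4})"
    r"(?:\.SAFE)?$"
)


def parse_s1_name(name: str) -> dict:
    """Split a Sentinel-1 product name into its metadata fields."""
    match = S1_NAME.match(name.rstrip("/"))
    if match is None:
        raise ValueError(f"Not a Sentinel-1 product name: {name}")
    fields = match.groupdict()
    fields["start"] = datetime.strptime(fields["start"], "%Y%m%dT%H%M%S")
    fields["stop"] = datetime.strptime(fields["stop"], "%Y%m%dT%H%M%S")
    fields["orbit"] = int(fields["orbit"])
    return fields


def eodata_prefix(name: str) -> str:
    """Hypothetical: rebuild a product's S3 prefix, ASSUMING the layout
    Sentinel-1/SAR/<type>/<yyyy>/<mm>/<dd>/<name>.SAFE/ seen in the example."""
    base = name.rstrip("/")
    if not base.endswith(".SAFE"):
        base += ".SAFE"
    fields = parse_s1_name(base)
    return f"Sentinel-1/SAR/{fields['product_type']}/{fields['start']:%Y/%m/%d}/{base}/"


print(parse_s1_name("S1B_IW_SLC__1SDV_20191013T155948_20191013T160015_018459_022C6B_13A2.SAFE"))
```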

Other Platforms

Apart from the Copernicus Data Space Ecosystem, other platforms also provide access to Sentinel data products and offer processing infrastructure:

- Google Earth Engine: Monthly fee plus resource usage (see Google Earth Engine pricing)
- EODC: On-demand pricing

Conclusion and Outlook

Satellite data offers a powerful tool for automating the calculation of above-ground biomass (AGB) on a large scale, as it provides access to large amounts of valuable information.

For acquiring Sentinel raw data, the Copernicus Data Space Ecosystem offers several good access points, as described above. Each of them can easily be integrated into a semi-automated workflow to acquire the freely available raw data programmatically.

However, to use Sentinel data in AGB estimation models, the data needs to be preprocessed, or analysis-ready data products need to be obtained. Unlike scientific institutions or non-profit organizations, private companies are generally not granted free access to these data products. For a company like Tree.ly, this means high costs, either for using a processing environment or for purchasing analysis-ready data. The next steps must therefore be considered carefully, with the goal of building a data pipeline that is as independent, scalable, and cost-efficient as possible, since this pipeline forms the foundation for automating AGB calculation for our use case.


Co-authored by Clara Tschamon